Cache tagging first appeared on my radar when I started working on Doctrine ORM in 2009 and the idea sounds extremely useful in theory. With cache tagging, cache entries can be grouped together under tag names, which then allows to simply invalidate all items with the same tag.

But When the Doctrine 1 team released cache tagging as a list of all members with a tag, suddenly users saw extreme slowdowns in their apps. For various reasons it is a mistake to introduce a cache key that is read and written to from essentially every request of the application, because it can cause massive performance problems when too many items are part of the same tag.

To guarantee consistency, caches use locks when they are being written to. If the same key is being written to at a very high rate, this can lead to considerable slow downs beause of locks. Another problem is the serialization overhead when cache tags contain a large amount of items.

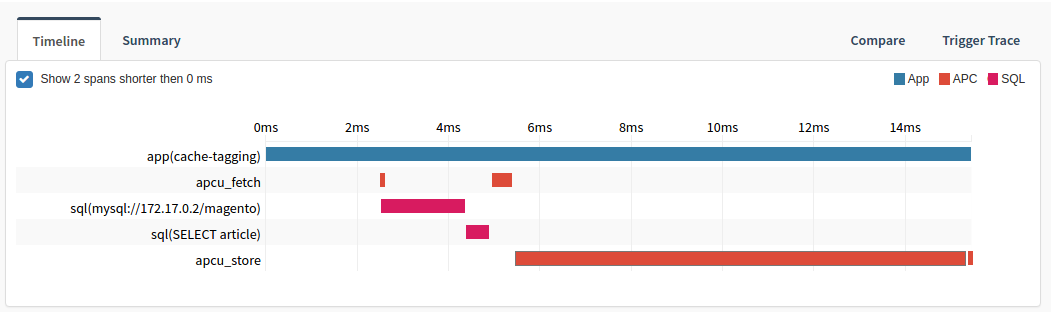

See this Tideways timeline with cache tagging using APC over 40.000 items with a shared key. The long apcu_store timespan of 10ms is the cache key being written.

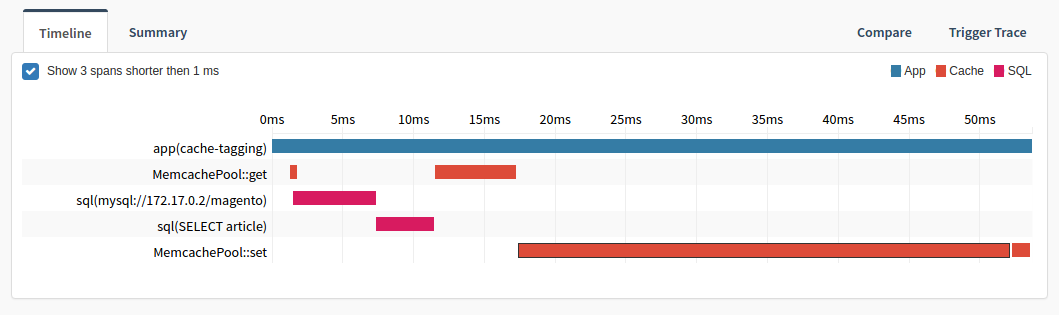

This effect is much worse when using Memcache, because it has to serialize the list of keys where APCu just copies the memory. in the same case of 40.000 items with a shared key it takes 5ms for MemcachePool::get and 34ms for MemcachePool::set to access and update the shared tagging cache key:

Naturally in Doctrine 1 the feature was immediately removed and we never reintroduced it back into Doctrine 2.

But even today many cache tagging implementations built on top of APCu or Memcache suffer from this problem and you should carefully evaluate them before use. Be wary about libraries that don’t explain how their cache tagging works internally. Only backends with second level indexes (Databases, Riak, Mongo) or sophisticated datastructures (Redis) are suitable for implementing efficient cache tagging.

So please remember, before using cache tagging as a quick fix for your cache invalidation problems: Without the right cache backend and a special purpose implementation for tagging you will quickly suffer from bad performance that you wanted to fix with caching in the first place..

There is one way to get it right, without heavy writes and serialization overhead. The Laravel cache implements this approach by storing a unique id for every cache tag and then prefixing the key of items in this tag with this unique id. To invalidate all items in a cache tag you must just regenerate the unique id and all the old cache keys are not accessed anymore. A simple reimplementation in APCu looks like this:

<?php

function apcu_tagged_store(array $tags, $key, $value, $ttl = 0) {

return apcu_store(apcu_tags($tags, $key), $value, $ttl);

}

function apcu_tagged_fetch(array $tags, $key, &$success) {

return apcu_fetch(apcu_tags($tags, $key), $success);

}

function apcu_tags(array $tags, $key) {

$ids = [];

foreach ($tags as $tag) {

$ids[] = apcu_entry("tag_" . $tag, function ($key) {

return uniqid('', true);

});

}

$ids[] = $key;

return implode('|', $ids); }

$article = ['id' => 100, 'category' => 20, 'text' => '...'];

apcu_tagged_store(['articles', 'category_20'], 100, $article);

$article = apcu_tagged_fetch(['articles', 'category_20'], 100); Explaining the apcu_tags function: It generates a unique key for every tag and concatenates them all as a prefix for the actual cache key. Then if you invalidate one of the tags, all the cache entries with this tag get regenerated on the next access.