Journey of Profiling a Slow Development Setup

While working on the migration from Vagrant to Docker with Compose in our development setup, we were seeing extremely slow responses in the browser and I immediately made a mistake to assume I know the problem without measuring.

A story in 7 acts.

1. Making the wrong assumptions

From common experience, the first thing to blame was Docker Volume Performance. So I optimized the obvious issues, that you can find in the many Docker on Mac Performance blog posts around the web:

- Set the volume to

:delegatedor:cached(Edit: Turns out this is moot, but you’ll find it on the internet everywhere) - Do not share the Composer and NPM/Yarn dependency folders into the container

- Enable the new experimental VirtioFS filesystem in Docker Desktop for Mac

- Regenerate the Symfony cache via docker-compose exec to make sure the cache generation is not holding up the request.

2. Reality breaks assumptions: Why is cache generation quick?

At that point I had a realization:

Using „docker-compose exec app php /app/bin/console cache:clear“ generated the cache in 3-4 seconds. If volume performance was the problem, then this CLI script should also be slow. Yet 3-4 seconds is pretty quick for generating the cache of our large Symfony application.

So it is most likely not be Docker volumes that are slow, but something in the Nginx, PHP-FPM or our application stack.

3. Identify where slowness comes from

To identify where to dig next, I executed the front controller „index.php“ script from the CLI as well.

$ docker-compose exec app php /app/web/index.phpThis gave a long 60 second response, which leads me to conclude that our PHP application is slow and not Nginx or PHP-FPM. I call this divide and conquer debugging, splitting up the potential causes and eliminating them one by one.

4. Identify what causes the slowness

I installed the tideways CLI into the Docker container next and re-ran the index.php script from the CLI with:

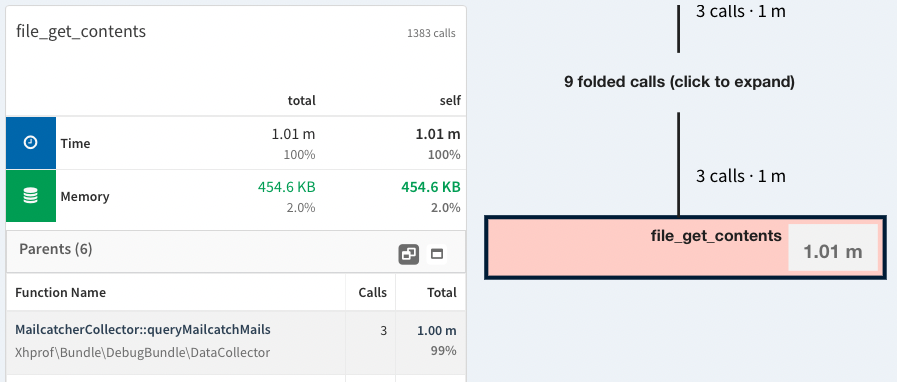

docker-compose exec app tideways run php /app/web/index.phpClicking on the output response to find out where the 60 seconds are coming from: Our own code making a HTTP request to a host that is not available using file_get_contents:

This is only a debug call, so its using file_get_contents and its default timeout of 60 seconds is what is causing the problem.

5. Setting Timeouts on HTTP calls

A case of not following our own advice of always setting timeouts on HTTP calls. We have a blog post on „Using HTTP Client Timeouts in PHP“ and Tideways itself warns when the default socket timeout is not adjusted to a lower value through an observation.

So changing the code to use a timeout:

private function queryMailcatchMails() : array

{

$context = stream_context_create([

'http' => ['timeout' => 1.0, 'ignore_errors' => true],

]);

$data = @file_get_contents("http://mailcatcher.local:1025/api/messages", false, $context);

// ...

}Still the same slow response! Turns out, since mailcatcher.local is a domain that does not resolve with DNS, PHP is hanging in dns resolving, which for some reason is not affected by the stream timeout settings!

6. Rewriting with Symfony HttpClient

So I did a rewrite with Symfony HTTP Cilent for the code, since we use that in many other places of the code base already:

private function queryMailcatchMails() : array

{

$client = HttpClient::create();

try {

$response = $client->request(

'GET',

'http://mailcatcher.local:1025',

options: ['timeout' => 1, 'max_duration' => 1]

);

$file = $response->getContent();

} catch (TransportExceptionInterface $e) {

return ['count' => 0, 'last' => []];

}

// ...

}

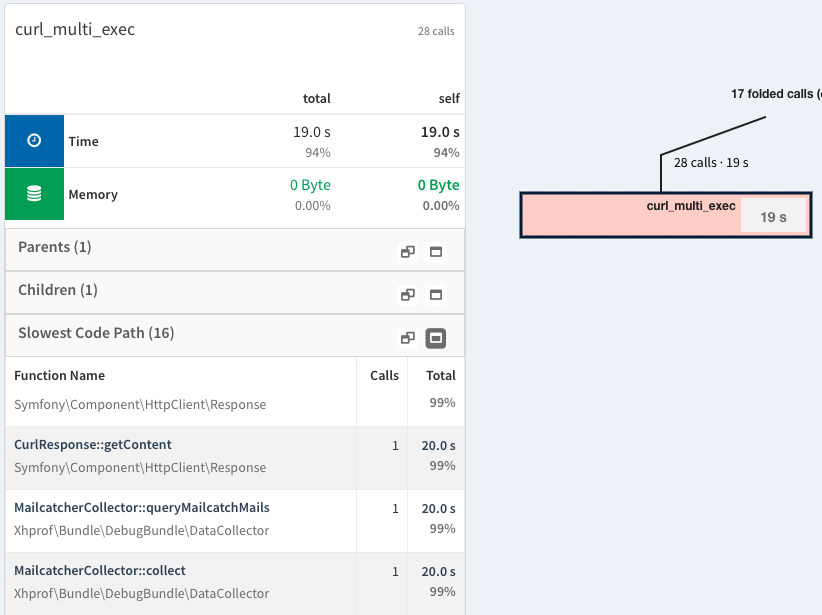

That did not work either, because of a bug in Symfony HttpClient only seting CURLOPT_TIMEOUT but not CURLOPT_CONNECTIONTIMEOUT. We still have 20 seconds spent in HTTP requests.

I have filed a bug report and will spend some time proposing a PR in the next few weeks.

7. Fixing the underlying problem: New hostname

This journey started with the migration from Vagrant to Docker, the mailcatcher.local domain was used in Vagrant, but it did not exist in Docker (yet). Once the mailcatcher service was running in the Docker Compose setup and the code changed to talk to the right HTTP host everything was fast again.

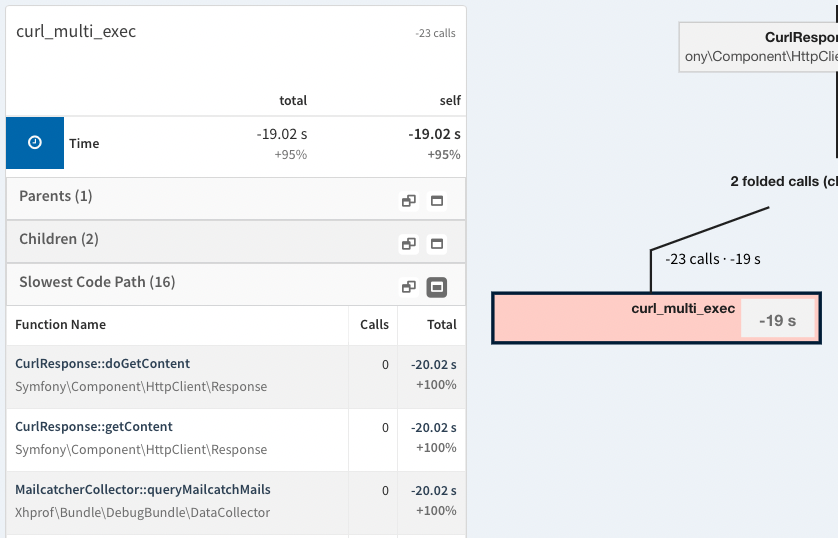

Here you can see the comparison of the old vs the new code in a callgraph comparison in Tideways: curl_multi_exec is now 19 seconds or 95% faster than before.

The moral of the story: don’t make assumptions without measuring, otherwise you might spend a lot of time optimizing something that is not slow.

In our case the Docker volumes were quick and response anyways, the problem was in the application and specifically the unavailable HTTP host.