What is the best value for max_execution_time in PHP?

By default, PHP's maximum execution time is set to 30 seconds of CPU time. Since your webserver has a limit on how many requests it can handle in parallel, this raises an interesting question of performance and webserver throughput.

Is the maximum execution time of 30 seconds too large or too small?

In general, it is too large: we recommend decreasing max_execution_time to the range of 5 to 10 seconds. But as so often, there is no one-size-fits-all solution.

Why does everyone lean towards increasing max_execution_time, though? If you search for this setting on Google and YouTube, you will find posts that exclusively discuss how to increase it, without talking about the implications.

The use case is often that a single page starts to run into the execution time limit, and the easiest option is to increase the global limit across all PHP processes and pages: it's just a quick configuration change, after all.

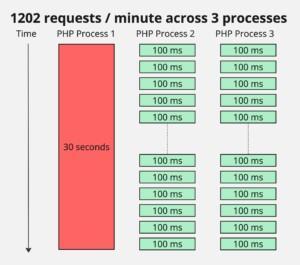

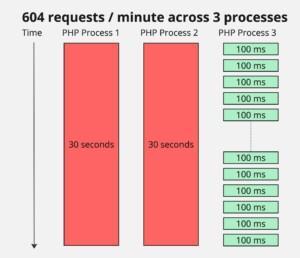

If your webserver is near its limit of concurrent requests, a single script running for 30 seconds occupies a PHP process that could have served 300 fast requests of 100 ms each. This leads to queueing delays or HTTP 503 errors sent by the webserver. If you allow long requests, the available throughput per minute for a given number of PHP processes decreases massively.

Impact of Slow Requests on Throughput

The following example shows the throughput difference for a hypothetical PHP setup with 3 parallel processes:
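A back-of-the-envelope sketch of this arithmetic, assuming 3 PHP processes that each serve one request at a time and are never idle, fast requests of 100 ms and slow requests of 30 s (illustrative numbers, not measurements). The function name fastRequestsPerMinute() is hypothetical:

```php
<?php

// Rough throughput model for a pool of PHP processes (illustrative numbers only).
// Assumes each process serves exactly one request at a time and is never idle.
function fastRequestsPerMinute(int $processes, int $slowProcesses, int $fastMs): int
{
    // Processes stuck in slow requests serve no fast requests at all
    return intdiv(($processes - $slowProcesses) * 60_000, $fastMs);
}

// Hypothetical setup: 3 PHP processes, fast requests take 100 ms
foreach ([0, 1, 2, 3] as $slow) {
    printf(
        "%d process(es) busy with slow requests: %d fast requests/minute\n",
        $slow,
        fastRequestsPerMinute(3, $slow, 100)
    );
}

// Each 30 s request displaces this many 100 ms requests on its process
echo intdiv(30_000, 100), " fast requests displaced per 30 s request\n";
```

With no slow requests the pool handles 1800 fast requests per minute; every process tied up by 30 s requests removes 600 of them, and once all three are busy, fast requests only get through when a slow one finishes.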

In the worst case, a stampede of users accessing slow parts of your site clogs up the available PHP processes, and other users are served HTTP 503 Service Unavailable errors.

These conflicting requirements (some pages need more time, while long requests hurt everyone's throughput) suggest that a differentiated approach is probably best: configure max_execution_time differently for different endpoints and request types.

Strategies to safely allow scripts with a high max_execution_time

There are a few strategies that address the competing constraints of decreasing max_execution_time in general while allowing some scripts to run longer:

- Set max_execution_time for just one or a few pages.

- Use multiple PHP-FPM pools with different configurations.

- Implement a waiting list or a locking mechanism that allows at most one instance of a slow script to run at a time.

- Offload slow work to a message queue.
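The locking strategy could look roughly like the following minimal sketch, assuming a single server: flock() only coordinates processes on the same host, so multiple servers would need a shared lock instead (for example in a database). The helper runExclusively(), the lock file path and generateReport() in the usage comment are hypothetical:

```php
<?php

// Hypothetical helper: run $work only if no other process currently holds the lock.
// Returns false immediately (without waiting) when the slow script is already running.
function runExclusively(string $lockFile, callable $work): bool
{
    // 'c' opens for writing, creating the file if needed, without truncating it
    $handle = fopen($lockFile, 'c');
    if ($handle === false) {
        throw new RuntimeException("Cannot open lock file {$lockFile}");
    }

    try {
        // LOCK_NB: do not block; fail right away if another process holds the lock
        if (!flock($handle, LOCK_EX | LOCK_NB)) {
            return false;
        }
        try {
            $work();
            return true;
        } finally {
            flock($handle, LOCK_UN);
        }
    } finally {
        fclose($handle);
    }
}

// Usage (generateReport() is a hypothetical slow function):
// if (!runExclusively(sys_get_temp_dir() . '/report.lock', fn () => generateReport())) {
//     http_response_code(503);
//     header('Retry-After: 30');
// }
```

Rejecting instead of waiting keeps a stampede on the slow page from tying up more than one PHP process. If the script is killed by the execution time limit, the `finally` blocks do not run, but PHP should still release the lock when it frees the file handle at the end of the request.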

Programmatic Configuration based on Request

The max_execution_time INI setting can be changed at PHP runtime, so it makes sense to have a central place in your code base that determines its value based on the request data (for example, the URL and the HTTP method):

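```php
<?php

// Symfony HttpFoundation based example; the same is possible through $_SERVER
use Symfony\Component\HttpFoundation\Request;

require __DIR__ . '/vendor/autoload.php'; // assumes Composer autoloading

$request = Request::createFromGlobals();

// Low default for all requests
ini_set('max_execution_time', '5');

// Write requests get more time, so they are less likely to be aborted halfway
if ($request->getMethod() === 'POST') {
    ini_set('max_execution_time', '10');
}

// Admin pages get the highest limit
if (str_starts_with($request->getPathInfo(), '/admin')) {
    ini_set('max_execution_time', '15');
}
```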
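The comment in the example notes that the same works without Symfony. A minimal sketch based on `$_SERVER`, with the decision moved into a pure function so it can be unit-tested; `determineMaxExecutionTime()` is a hypothetical name, the limits mirror the example above, and `str_starts_with()` requires PHP 8.0 or newer:

```php
<?php

// Hypothetical helper mirroring the Symfony example as a pure, testable function
function determineMaxExecutionTime(string $method, string $path): int
{
    $timeout = 5; // low default for all requests

    if ($method === 'POST') {
        $timeout = 10; // write requests get more time
    }

    if (str_starts_with($path, '/admin')) {
        $timeout = 15; // admin pages get the highest limit
    }

    return $timeout;
}

$method = $_SERVER['REQUEST_METHOD'] ?? 'GET';
// REQUEST_URI may include a query string; keep only the path
$path = parse_url($_SERVER['REQUEST_URI'] ?? '/', PHP_URL_PATH) ?: '/';

ini_set('max_execution_time', (string) determineMaxExecutionTime($method, $path));
```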

Example: max_execution_time on Tideways app

We recently came across this problem in Tideways, where a small number of relatively unimportant reporting requests jammed the many important short API endpoints that accept data.

There is no single perfect value, so instead we solved this problem by applying the following heuristics when configuring our execution timeouts:

- Read requests (GET, HEAD) now have a lower default timeout, because they usually don't cause inconsistencies when they are aborted by a timeout fatal error, and they usually make up a large share of the app's traffic.

- Write requests (POST, ...) default to a higher timeout, to avoid inconsistencies when a request is aborted during execution.

- We measured the latency of all endpoints, especially the 95th and 99th percentiles and the maximum duration, as well as the number of requests, to get a feeling for what good default values could be. Tideways itself collects these values per endpoint over longer timespans.

- We increased the timeout for endpoints that can take longer than the default timeout, as long as they are low-traffic endpoints. Otherwise, we need to optimize their performance.

- We decreased the timeout for endpoints that are fast but account for a very high share of requests. If their performance degrades, these requests could quickly jam the webserver's resources.
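The heuristics above can be condensed into a rough sketch. Everything here is a hypothetical illustration: `percentile()`, `suggestTimeout()` and all thresholds (5 s and 10 s defaults as in the earlier example, below 1 % of traffic counting as low-traffic, above 20 % as high-traffic, 1.5x and 3x headroom, a 2 s floor) are assumptions chosen for the example:

```php
<?php

// Nearest-rank percentile of request durations in seconds
function percentile(array $durations, float $p): float
{
    if ($durations === []) {
        throw new InvalidArgumentException('No durations given');
    }
    sort($durations);
    $rank = (int) ceil($p / 100 * count($durations));
    return $durations[max($rank, 1) - 1];
}

// Suggest a timeout in seconds; $shareOfTraffic is this endpoint's fraction of all requests (0..1)
function suggestTimeout(string $method, array $durations, float $shareOfTraffic): int
{
    // Reads get a lower default than writes
    $default = in_array($method, ['GET', 'HEAD'], true) ? 5 : 10;
    $p99 = percentile($durations, 99);

    if ($p99 > $default && $shareOfTraffic < 0.01) {
        // Slow but low-traffic: allow the p99 plus some headroom
        return (int) ceil($p99 * 1.5);
    }

    if ($p99 < 1.0 && $shareOfTraffic > 0.2) {
        // Fast and high-traffic: tighten so a slowdown cannot jam the workers
        return max(2, (int) ceil($p99 * 3));
    }

    // Slow and high-traffic endpoints keep the default and need optimizing instead
    return $default;
}
```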

These are pretty basic heuristics. There are more sophisticated solutions for more complex timeout cases:

- If you have requests that are often slow but are still executed in high numbers, consider running them in their own PHP-FPM pool with its own maximum number of parallel requests. This way they don't affect other requests when they arrive in high numbers.

- If you have endpoints that could write inconsistent data, but you want to limit their execution time to a low timeout anyway, consider writing your own timeout logic to get full control over any necessary cleanup.

- Slowness is often caused by external services (HTTP) or databases (MySQL), in which case we would solve the problem by specifying client-side timeouts that can be handled gracefully.
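For the external HTTP case, a minimal sketch using PHP's built-in HTTP stream wrapper and its `timeout` context option. PHP's documentation notes that on non-Windows systems, time spent in stream operations and database queries does not count towards max_execution_time, so an explicit client-side timeout is often the only limit on such waits. `fetchWithTimeout()` and the URL in the usage comment are hypothetical:

```php
<?php

// Hypothetical helper: give up on a slow upstream service after $timeoutSeconds
// and return null, so the caller can fall back gracefully.
function fetchWithTimeout(string $url, float $timeoutSeconds): ?string
{
    $context = stream_context_create([
        'http' => [
            // Timeout (seconds, float) used by the HTTP stream wrapper for this request
            'timeout' => $timeoutSeconds,
        ],
    ]);

    // @ suppresses the warning; the failure is handled via the return value instead
    $body = @file_get_contents($url, false, $context);

    return $body === false ? null : $body;
}

// Usage (URL and $cachedRates are hypothetical):
// $rates = fetchWithTimeout('https://api.example.com/rates', 2.0) ?? $cachedRates;
```

Database extensions offer comparable connect and read timeout options; the exact option names depend on the driver, so check its documentation.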

I will write about each of them individually in the coming weeks. If you are interested, make sure to sign up for the newsletter to get notified when the posts are published.
